The growing power of artificial intelligence (AI) in mobile applications has changed how people use technology. Leading AI software development companies are increasingly focusing on integrating AI models to drive innovation and enhance user engagement. Apps powered by AI and machine learning can analyze large amounts of data, anticipate user actions, and even personalize the user experience. However, implementing a trained AI model in a mobile application is a complex task. It requires an efficient model, an understanding of the target platforms, and knowledge of model optimization.

This blog covers how to implement trained AI models in mobile apps, the essential technologies involved, and how to make AI-powered mobile applications work effectively.

Building AI-Powered Mobile Apps: AI Features in Mobile Solutions

Before integrating a trained AI model, you need to understand how adding AI features to mobile apps differs from adding them to ordinary server or web applications. Mobile apps need models that are small and energy-efficient enough to run on the device. Such models let applications provide AI-driven features, such as image recognition, natural language processing, and predictive text, without a constant connection to a server.

For apps aiming to provide interactive, conversational experiences, developers can create AI agents using GPT technology, enhancing user engagement through natural language interactions.

OS Compliance:

Models intended for mobile operating systems must be packaged in a format the target platform supports and must meet the platform's requirements for Android or iOS. Examples include a .tflite file for TensorFlow Lite or a Core ML model for iOS.

Modelling for Mobile Devices:

Models for mobile devices must be small and highly efficient, and TensorFlow Lite is a standard way to achieve this. Developers can train models in languages like Python and then optimize them to fit the constraints and capabilities of mobile platforms.
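A quick back-of-the-envelope estimate shows why size matters. A model's weight storage is roughly its parameter count multiplied by the bytes used per parameter. The sketch below is a minimal illustration under that assumption. The function name and the 4-million-parameter figure are hypothetical, and real model files add some format overhead.

```python
# Back-of-the-envelope weight storage: parameters x bits per parameter.
# Ignores file-format overhead and non-weight metadata.

def weight_size_mb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bits_per_param / 8 / 1_000_000


if __name__ == "__main__":
    params = 4_000_000  # hypothetical 4M-parameter model
    for bits in (32, 16, 8):
        print(f"{bits}-bit weights: {weight_size_mb(params, bits):.1f} MB")
    # 32-bit weights: 16.0 MB
    # 16-bit weights: 8.0 MB
    # 8-bit weights: 4.0 MB
```

Halving the bits per parameter halves the download size and the memory needed to load the weights. This is why the optimization steps later in this article focus on lower-precision storage.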

Data Privacy:

From a security perspective, running analysis on the device means less data is sent to third parties, which is a growing concern today. To ensure smooth integration of AI into mobile applications, businesses are encouraged to hire dedicated developers who specialize in mobile AI model optimization and deployment.

Prominent Technologies for AI in Mobile Apps

Several frameworks let developers run trained AI models in mobile apps, and they differ in effectiveness and ease of use. Machine learning lets mobile apps learn from user interactions and offer smarter, context-driven features that adapt to each user.

TensorFlow Lite:

TensorFlow Lite is Google's framework for on-device machine learning, and it is well suited to deploying trained AI models on both Android and iOS. It runs mobile-optimized models, including pre-trained ones, with low latency. It also offers APIs for multiple languages, which makes it very versatile. Because its runtime is lightweight, developers can add on-device AI functionality with minimal latency.

Core ML:

Core ML is Apple's framework for adding AI features to iOS applications. It exposes a simple API for on-device ML tasks. Models created in TensorFlow, PyTorch, and other frameworks can be converted to the Core ML format, for example with Apple's coremltools package.

ONNX (Open Neural Network Exchange):

ONNX is a robust open-source ecosystem built around model interoperability between AI frameworks. A model exported to the ONNX format can run on both Android and iOS, for example with ONNX Runtime. This lets developers deploy cross-platform models with few changes.

Choosing the Right Framework:

When selecting a framework, consider the app's target operating system, the complexity of the AI model, and the performance the model must deliver. The right framework can speed up development and improve the application's performance.

How to Implement a Trained AI Model in Your Mobile Application

Deploying an AI model in a mobile application usually follows a step-by-step process. Here is a more detailed description of the key steps:

Step 1: Define the Task Your AI Model Will Perform

Start with a simple definition of the purpose your AI model will serve in your app, such as image classification, language processing, or predictive analytics. Once you have defined that role, you can zero in on an appropriate type of model and framework for it.

Step 2: Choosing or Training Your AI Model

Once you have defined your purpose, you then have to determine whether you are using a pre-trained model or a custom model:

Pre-Trained Models:

For many common tasks, such as image recognition, pre-trained models are ready to use, which saves time and resources.

Custom Models:

If your application demands unique functionalities, you have to train a custom model to suit your needs.

Step 3: Model Conversion and Optimization for Mobile Implementation

Mobile devices have far less computing power than desktops or cloud servers. A model trained in those environments must therefore be converted and optimized before it can run well on a phone:

- Apply model quantization: storing weights (and often activations) at lower precision, such as 8-bit integers instead of 32-bit floats, shrinks the model with little loss of accuracy.

- Apply model pruning: removing parameters that contribute little to the output, during or after training, makes the model smaller and can speed up inference.

- Leverage Edge AI (on-device processing): processing data on the device rather than on external servers minimizes latency, improves performance, and enhances data privacy and security.

- With the rise of AI-powered app development, applications can now offer predictive features, real-time data processing, and personalized recommendations directly on mobile devices.
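The following minimal pure-Python sketch makes quantization concrete. It uses affine 8-bit quantization, which maps each float weight w to an integer q = round(w / scale) + zero_point. It illustrates the arithmetic under our own assumptions and is not the exact scheme any particular runtime uses. In practice you would let your framework's converter do this for you, for example TensorFlow Lite's post-training quantization. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization: q = round(w / scale) + zero_point, clamped to [-128, 127]."""
    qmin, qmax = -128, 127
    # Widen the range to include 0.0 so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # One integer step covers `scale` in float units; fall back to 1.0 for all-zero input.
    scale = (w_max - w_min) / (qmax - qmin) or 1.0
    zero_point = round(qmin - w_min / scale)
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from their int8 codes."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.5, 1.0]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f}")
```

Each weight now needs 1 byte instead of 4. The round-trip error stays within about one quantization step (`scale`), which is why accuracy usually drops only slightly.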

Step 4: Model Implementation through Choosing a Suitable Framework

Once the model is ready, integrate it into your app using a suitable framework: TensorFlow Lite, Core ML, or ONNX. Integration involves importing the model, setting up the appropriate input and output layers, and then implementing the AI logic in the app's code.
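On every framework, the integration logic around a model usually has the same shape: preprocess the input, run the model, and interpret the output. The sketch below shows that shape in Python for readability. The `model` argument is a hypothetical stand-in for your runtime's inference call, such as a TensorFlow Lite interpreter or a Core ML prediction. Scaling pixels to [0, 1] is an assumed input convention, so check what your model actually expects.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for the runtime's inference call: floats in, raw scores (logits) out.
Model = Callable[[List[float]], List[float]]


def preprocess(pixels: Sequence[int]) -> List[float]:
    """Scale 8-bit pixel values to [0, 1] (an assumed input convention)."""
    return [p / 255.0 for p in pixels]


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(model: Model, pixels: Sequence[int], labels: Sequence[str]) -> Tuple[str, float]:
    """Input layer -> model -> output layer -> (label, confidence)."""
    probs = softmax(model(preprocess(pixels)))
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs[best]


if __name__ == "__main__":
    # Toy model for demonstration only: scores depend on overall brightness.
    def toy_model(x: List[float]) -> List[float]:
        s = sum(x)
        return [s, 0.0, -s]

    print(classify(toy_model, [255, 255], ["bright", "neutral", "dark"]))
```

Keeping preprocessing and postprocessing in small functions like these makes it easier to test them separately from the framework-specific inference call.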

Step 5: Test and Optimize for Performance and User Experience

Testing is a critical part of AI model deployment. Run the model under different conditions, track latency, and check the accuracy of its predictions. If problems appear, optimize performance, because slow real-time processing can discourage people from using the product.
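The following sketch shows one way to track latency and accuracy together during testing. It is a minimal harness, assuming a `predict` function and a list of labelled samples, both hypothetical. It reports the median and a nearest-rank 95th-percentile latency, because occasional slow predictions are what users notice. On a real device you would use the platform's own profiling tools as well.

```python
import math
import statistics
import time
from typing import Any, Callable, Dict, List, Sequence, Tuple


def benchmark(predict: Callable[[Any], Any],
              samples: Sequence[Tuple[Any, Any]],
              warmup: int = 3) -> Dict[str, float]:
    """Time each prediction and score it against the expected label."""
    # Warm-up calls (caches, lazy initialization) are not timed.
    for x, _ in samples[:warmup]:
        predict(x)

    latencies_ms: List[float] = []
    correct = 0
    for x, expected in samples:
        start = time.perf_counter()
        result = predict(x)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
        correct += int(result == expected)

    latencies_ms.sort()
    # Nearest-rank 95th percentile: tail latency matters more than the average.
    p95_index = max(0, math.ceil(0.95 * len(latencies_ms)) - 1)
    return {
        "accuracy": correct / len(samples),
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
    }


if __name__ == "__main__":
    # Toy "model" that predicts parity, with matching labels.
    print(benchmark(lambda x: x % 2, [(i, i % 2) for i in range(100)]))
```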

What Are the Issues in Integrating AI Models into Mobile Applications?

Integrating AI models into mobile applications raises several challenges, including:

Battery Drain and Performance:

AI processing places heavy demands on a mobile device, which can drain the battery quickly. You can reduce this by optimizing the model and keeping a sensible balance between on-device and cloud processing.
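The following sketch shows how an app might balance on-device and cloud processing. It is a hypothetical routing policy, not a standard API: `DeviceState`, `choose_backend`, and the 20% threshold are all illustrative assumptions. Offloading to the cloud saves battery but sends user data off the device. The policy therefore keeps work local by default and offloads only heavy tasks when the battery is low and Wi-Fi is available.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    battery_pct: int    # 0-100
    is_charging: bool
    on_wifi: bool       # assumption: only offload over Wi-Fi


def choose_backend(state: DeviceState, heavy_task: bool, low_battery_pct: int = 20) -> str:
    """Return "device" or "cloud" for one inference request.

    The policy and the 20% threshold are illustrative assumptions, not tuned values.
    """
    if not heavy_task:
        return "device"   # light models stay local: no network round trip, data stays on the phone
    if state.is_charging:
        return "device"   # battery drain is not a concern while charging
    if state.battery_pct <= low_battery_pct and state.on_wifi:
        return "cloud"    # offload heavy work to preserve a low battery
    return "device"       # default: keep processing (and user data) on the device


if __name__ == "__main__":
    print(choose_backend(DeviceState(battery_pct=12, is_charging=False, on_wifi=True), heavy_task=True))
```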

Risk of Data Breach:

Some AI-based features, such as recommended content, collect user data. You can reduce the risk of exposing that data with privacy techniques such as encrypting it and processing it on the device itself.

Device Fragmentation and Compatibility:

Mobile devices vary widely in screen resolution and processing capability, and users expect an app to work on whichever device they own. Making a model fit all devices takes considerable testing and often some revisions to the model.
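One way to cope with this variety is to ship several model variants and pick one at startup based on the device's capabilities. The sketch below illustrates that idea under stated assumptions. The variant names, the RAM thresholds, and the `needs_accelerator` flag (standing for a GPU or NPU being available) are all hypothetical. The last variant acts as a fallback that any device can run.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelVariant:
    name: str
    min_ram_mb: int
    needs_accelerator: bool   # hypothetical: requires a GPU/NPU to run fast enough


# Hypothetical variants, ordered from most to least demanding.
VARIANTS: List[ModelVariant] = [
    ModelVariant("detector_fp16_large", min_ram_mb=6000, needs_accelerator=True),
    ModelVariant("detector_int8_medium", min_ram_mb=3000, needs_accelerator=False),
    ModelVariant("detector_int8_small", min_ram_mb=0, needs_accelerator=False),  # fallback
]


def pick_variant(ram_mb: int, has_accelerator: bool) -> ModelVariant:
    """Return the most capable variant this device can handle."""
    for v in VARIANTS:
        if ram_mb >= v.min_ram_mb and (has_accelerator or not v.needs_accelerator):
            return v
    raise RuntimeError("no compatible model variant")


if __name__ == "__main__":
    print(pick_variant(ram_mb=4000, has_accelerator=False).name)
```

Testing then focuses on a small, fixed set of variants rather than on every individual device.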

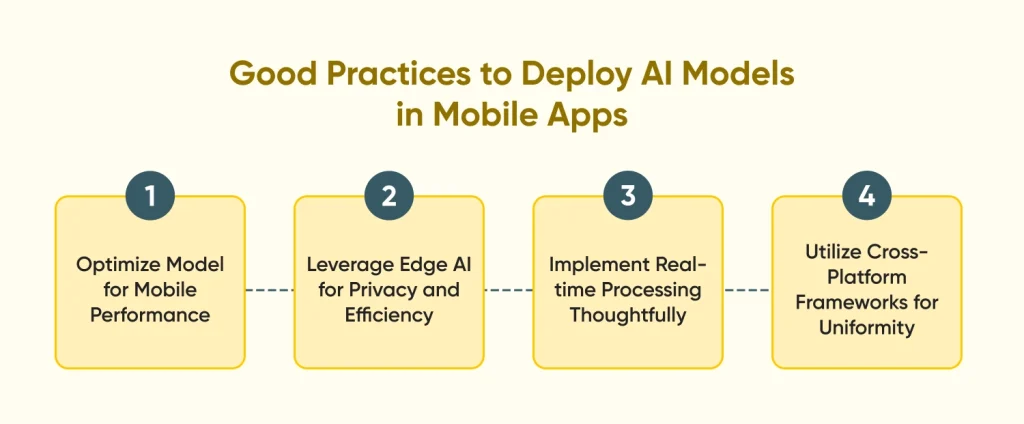

Good Practices for Deploying AI Models in Mobile Apps

To get the best from your AI model and enhance your app's functionality, follow these good practices:

Optimize Model for Mobile Performance

The model must run within a mobile device's limited resources without straining them. Techniques such as quantization and pruning can reduce the model's size and speed up execution.
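Pruning can be illustrated just as simply. The following minimal sketch shows magnitude pruning, one common pruning criterion, which zeroes out the fraction of weights with the smallest absolute values. The function name is hypothetical. The zeros save space only when the model is stored or executed in a sparse or compressed form. In practice, pruning is usually followed by fine-tuning to recover accuracy, and frameworks provide their own tooling for both steps.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    # Indices sorted by magnitude; the first n_prune are the least important weights.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    print(magnitude_prune([0.9, -0.05, 0.3, 0.01, -0.7, 0.2], sparsity=0.5))
    # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
```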

Leverage Edge AI for Privacy and Efficiency

Edge AI, or on-device AI processing, has become very popular for mobile applications. Because it processes data on the device, it reduces reliance on external servers, which benefits both performance and privacy.

Implement Real-Time Processing Thoughtfully

Real-time AI features enhance usability but must be implemented carefully so they do not slow the application down. Refine the code so the app responds quickly, particularly for tasks that demand immediate feedback, such as voice recognition or camera-based functions.
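One common way to keep camera-based features responsive is to avoid running the model on every frame. The sketch below is a simple time-based throttle that runs inference on at most N frames per second and skips the rest. The class name and the 10-per-second rate are illustrative assumptions. In a real app, inference would also run off the UI thread.

```python
from typing import Optional


class InferenceThrottle:
    """Run inference on at most `max_per_s` frames per second and skip the rest.

    Frame timestamps (in milliseconds) are passed in, e.g. from the camera,
    which keeps the logic deterministic and easy to test.
    """

    def __init__(self, max_per_s: float) -> None:
        self.min_interval_ms = 1000.0 / max_per_s
        self._last_run_ms: Optional[float] = None

    def should_run(self, frame_time_ms: float) -> bool:
        if self._last_run_ms is None or frame_time_ms - self._last_run_ms >= self.min_interval_ms:
            self._last_run_ms = frame_time_ms
            return True
        return False  # skip this frame; the UI keeps showing the last result


if __name__ == "__main__":
    throttle = InferenceThrottle(max_per_s=10)
    frames_ms = [i * 33 for i in range(30)]  # roughly one second of 30 fps video
    print([t for t in frames_ms if throttle.should_run(t)])
    # [0, 132, 264, 396, 528, 660, 792, 924]
```

Skipping frames trades a little freshness for a smooth interface. A camera preview at 30 fps still looks fluid even if the overlay updates only about eight times per second.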

Utilize Cross-Platform Frameworks for Uniformity

Cross-platform frameworks such as ONNX simplify deployment by letting you use the same AI model on both Android and iOS. This gives users a consistent experience, cuts development time, and helps the app meet its audience's needs on both platforms.

Examples of AI Models in Popular Mobile Apps

Several popular apps utilize AI models to provide enhanced capabilities:

Snapchat:

Snapchat's image recognition and face filters use AI algorithms to identify facial features and apply effects in real time.

Google Translate:

Google Translate uses on-device AI to recognize and translate text in pictures. With downloaded offline language files, it can do this without an internet connection.

TikTok:

TikTok's recommendation algorithm studies users' interactions and preferences and uses predictive models to generate personalized video feeds.

Each of these examples shows the power of well-integrated AI. Real-time processing and smart recommendations make these apps more valuable and their experience richer.

Conclusion

By building AI-powered models into their mobile applications, companies can become smarter enterprises, enabling more data-driven decisions and seamless automation. Each step, from choosing the right framework to optimizing for mobile devices, takes effort but makes AI experiences seamless and powerful. By applying best practices, optimizing for performance, and prioritizing user privacy, developers can build smarter AI-powered mobile applications that meet and exceed user expectations.

Frequently Asked Questions

How can I implement a trained AI model in my mobile app?

You can add a trained AI model by selecting an appropriate framework, such as TensorFlow Lite or Core ML. Next, optimize the model for mobile use. Then embed it in your app's code to deliver real-time AI features.

What are the best frameworks for integrating AI models into mobile apps?

TensorFlow Lite, Core ML, and PyTorch Mobile are among the best choices. TensorFlow Lite and PyTorch Mobile support both Android and iOS, while Core ML is optimized for Apple's platforms.

Can I use the same AI model on both Android and iOS platforms?

Yes. Frameworks such as TensorFlow Lite and ONNX support cross-platform deployment, so you can use essentially the same model on Android and iOS with minimal adjustments.

What are the best practices for implementing real-time AI in mobile applications?

Best practices for real-time AI include optimizing the model for battery efficiency, using edge AI for privacy, and striking the right balance between on-device and cloud processing.