Large language models (LLMs) have transformed a variety of sectors, particularly those focused on customer service. AI chatbots, faster content production, and enhanced data analysis have changed how customer service works. Because so many models are available, each with distinctive features, choosing the right one has become crucial for businesses.

Selecting the appropriate LLM helps you avoid spending more money for less-than-ideal results. Making the wrong choice, by contrast, can waste resources on top of costly capital expenditure.

According to Statista, 26% of global businesses chose to use embedding models in their commercial deployment. This article explains how to choose the best LLM for your project, discusses performance measures and business alignment, and compares popular evaluation frameworks and tools. Let us dive in to find the best fit for your needs.

LLM Evaluation Framework: What Is It?

LLM evaluation is the process of assessing a model’s performance and efficacy in real-world situations. These models interpret user queries and respond to tasks such as question answering, translation, video summarization, and text generation.

Developers evaluate these models thoroughly to find out what works and what needs to be improved. This guides future LLM development and helps reduce the risk of biased or misleading content. If you are an AI researcher, developer, data scientist, or business owner, focusing on AI chatbot development alone is therefore not enough; you also need LLM evaluation to ensure performance, correctness, and reliability.

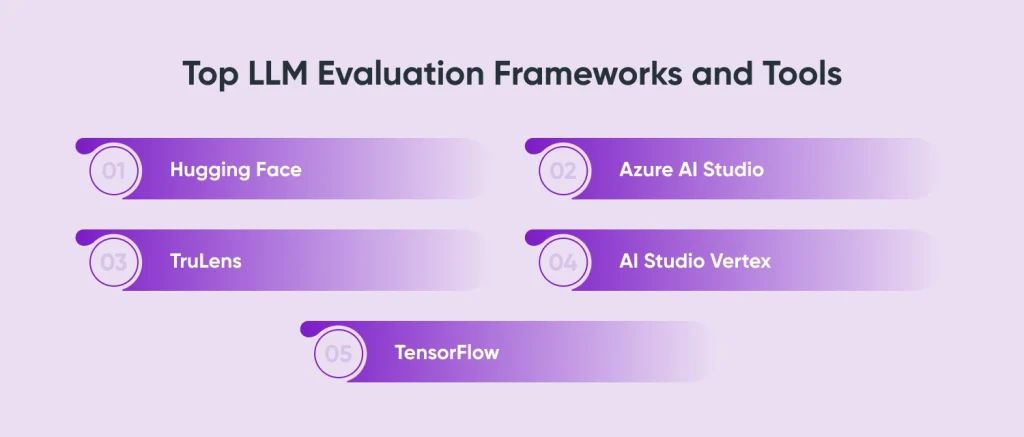

What Are the Top LLM Evaluation Frameworks and Tools?

As AI continues to advance, numerous new LLM evaluation frameworks and tools have been introduced. They offer capabilities such as model training, performance and reliability testing, and fairness monitoring.

With so many options available, selecting the best LLM evaluation framework is challenging. The most commonly used ones are listed here, in no particular order.

Hugging Face

Hugging Face provides the datasets, pre-trained models, and building blocks needed to create NLP applications. Think of it as a GitHub for machine learning: developers and researchers value its vast library and community-contributed resources. Its user-friendly, modern interface lets them find, share, collaborate on, and experiment with LLMs.

Azure AI Studio

Microsoft’s Azure AI Studio includes enterprise-grade tools, integrated analytics, and customizable evaluation workflows. These Azure AI services provide a comprehensive environment for developing, deploying, evaluating, and managing generative AI applications. It is best suited to teams working within the Microsoft ecosystem who want a framework with strong security and performance.

TruLens

TruLens is an open-source tool known for emphasizing interpretability and transparency in LLM evaluation. It helps developers and stakeholders understand how an LLM arrived at a given output, which supports trust and adherence to privacy regulations.

The tool assesses the quality of LLM-based apps using a number of feedback functions, including safety, context relevance, and groundedness. Its built-in feedback functions assess the quality of inputs, outputs, and intermediate results.

Use cases including question answering, summarization, retrieval-augmented generation, and agent-based applications are all good fits for the tool.

Vertex AI Studio

Vertex AI Studio is a cloud-based console tool for building, tuning, and deploying AI models, including LLMs. You can use it to test sample prompts, design your own prompts, and customize foundation models so that they handle the tasks your application requires. It also lets you connect LLMs to third-party services.

Furthermore, with Vertex AI’s end-to-end MLOps capabilities and fully managed API infrastructure, the tool not only provides support up to launch but also lets users scale, manage, and monitor foundation models afterwards.

TensorFlow

TensorFlow is an open-source machine learning library that can also be used to evaluate models, including LLMs. It was created by Google’s internal research and production teams. TensorFlow includes resources, libraries, and flexible tools that help assess models efficiently. These resources are also widely used to build production-ready applications and to advance research.

What Challenges Arise in LLM Evaluation?

The following are typical issues that companies and developers run into when assessing an LLM.

Subjectivity in Human Assessment

Human evaluation is one of the most popular techniques for assessing LLMs. This approach, however, introduces bias and differing interpretations. Assessors do not weigh relevance, coherence, and fluency equally, so what one considers ideal another may deem inadequate.

Additionally, when evaluation criteria are loosely defined, different assessors will reach different conclusions about the same output.
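One way to put a number on how much assessors disagree is an inter-rater agreement statistic. The sketch below computes Cohen's kappa for two assessors who labelled the same outputs. The labels and ratings are hypothetical, and kappa is brought in here purely as an illustration; it is not something the frameworks above prescribe.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Share of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of six model outputs by two assessors.
rater_a = ["good", "good", "bad", "good", "bad", "bad"]
rater_b = ["good", "bad", "bad", "good", "bad", "good"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

A value near 1 suggests the assessors apply the criteria consistently, while a value near 0 suggests their agreement is little better than chance and the rating guidelines may need tightening.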

Fairness in Automated Assessments

Automated LLM evaluation metrics typically inherit the biases that already exist in their underlying data. These biases can take many different forms and affect the LLM’s measured results.

Adversarial Attacks

Certain inputs can fool LLMs into behaving in a particular way. As a result, they are vulnerable to adversarial attacks such as data poisoning or model manipulation. These attacks impair the model’s comprehension, producing inaccurate, biased, or harmful results.

Widely used evaluation techniques still lack the capabilities needed to identify these attacks, and research into comprehensive evaluation is ongoing. Furthermore, GenAI models can run into ethical or legal problems, which ultimately affect the LLMs employed in specific industries.
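A very small part of this problem, sensitivity to manipulated inputs, can be probed with a consistency check: perturb a prompt in ways that should not change the answer and see how often the output stays the same. The sketch below is an illustration under stated assumptions. `toy_model` is a hypothetical stand-in for a real LLM call, and the perturbations are simple, benign edits rather than real attacks.

```python
def consistency_rate(model, prompt, perturbations):
    """Fraction of perturbed prompts whose output matches the unperturbed output."""
    if not perturbations:
        raise ValueError("at least one perturbation is required")
    baseline = model(prompt)
    unchanged = sum(model(perturb(prompt)) == baseline for perturb in perturbations)
    return unchanged / len(perturbations)


def toy_model(text):
    # Hypothetical stand-in for an LLM call: a brittle, case-sensitive keyword matcher.
    return "refund" if "refund" in text else "other"


# Hypothetical meaning-preserving perturbations of the input.
perturbations = [
    str.upper,                       # change the casing
    lambda s: s.replace(" ", "  "),  # double the whitespace
    lambda s: s + " please",         # append a harmless word
]

rate = consistency_rate(toy_model, "I want a refund", perturbations)
print(f"consistent on {rate:.0%} of perturbed prompts")
```

A low rate flags brittleness worth investigating, but a high rate does not show that a model is safe from deliberate attacks.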

Problems with Scalability

LLMs can handle a growing variety of challenging tasks. Evaluating them on these tasks requires a large amount of reference data, yet gathering that much data is difficult. Insufficient data in turn limits the assessment and results in only a partial examination of the model’s functionality.

Absence of Measures of Diversity

Current evaluation systems typically do not adequately assess how LLMs perform across different populations, cultures, and languages. They prioritize accuracy and relevance while ignoring other crucial qualities such as creativity and diversity. It is therefore hard to claim that a model performs at its best for every group of users.

The Best Practices for Assessing LLM Models

Checking the numbers is only one part of evaluating LLMs. Let’s examine a few widely used practices that can improve your LLM evaluation.

Establishing Specific Goals

Establish specific objectives for your LLM assessments. What do you want your LLM to achieve: factual accuracy, fast responses, contextual understanding, creativity, or something else? Setting a goal makes it easier to focus your efforts and track your progress over time.

Balancing Qualitative and Quantitative Analysis

It’s crucial to draw on multiple sources of evidence. Combining numerical data with human assessment gives you a complete view of the LLM’s performance. Quantitative analysis supplies the numerical data, while human review supplies the qualitative side.
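As a rough illustration of combining the two, the sketch below computes a simple automatic metric (token-overlap F1 between a model output and a reference answer) and reports it alongside the average human rating. The sample records, the 1 to 5 rating scale, and the choice of metric are all assumptions made for the example.

```python
from collections import Counter
from statistics import mean


def token_f1(prediction, reference):
    """Token-overlap F1 between a prediction and a reference (case-insensitive)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty strings count as a match; one empty string does not.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical evaluation records: model output, reference answer, human rating (1-5).
samples = [
    {"prediction": "Paris is the capital", "reference": "the capital is Paris", "human": 5},
    {"prediction": "Paris", "reference": "Paris France", "human": 4},
    {"prediction": "London", "reference": "Paris", "human": 1},
]

auto_scores = [token_f1(s["prediction"], s["reference"]) for s in samples]
report = {
    "mean_token_f1": mean(auto_scores),
    "mean_human_rating": mean(s["human"] for s in samples),
}
print(report)
```

Reading the two numbers side by side helps show where the automatic metric and human judgment diverge, which is often where the most useful review happens.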

Consider Your Target Audience

Specify who will use the output of your LLM. Tailor the evaluation criteria to the needs and characteristics of that audience. This helps confirm that your model is relevant and meets the needs of the right users.

Get Real-world Analysis

Test your LLM in real-world scenarios to evaluate its overall efficacy, user satisfaction, and ability to handle unforeseen circumstances. For example, consider adding domain-specific or industry-specific performance metrics to get fuller insight into the model’s behavior. This provides useful information about the LLM’s performance and lets you make the required adjustments.

Making Use of LLMOps

Make use of LLM operations (LLMOps), a subset of MLOps that streamlines LLM development and improvement. It provides tools and procedures that make ongoing assessment, performance tracking, and regular improvement easier. Following this process helps you keep your LLM metrics current as requirements evolve.
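Ongoing assessment of this kind often includes a regression check: each new model or prompt version is compared against a stored baseline before release. The sketch below is a minimal, hypothetical version of such a check. The metric names, values, and tolerance are assumptions, and it treats every metric as higher-is-better.

```python
def find_regressions(baseline, current, tolerance=0.02):
    """Return metrics that are missing or dropped by more than `tolerance` versus the baseline."""
    regressions = {}
    for name, old_value in baseline.items():
        new_value = current.get(name)
        if new_value is None or new_value < old_value - tolerance:
            regressions[name] = (old_value, new_value)
    return regressions


# Hypothetical metric values from the previous and the current evaluation run.
baseline = {"accuracy": 0.91, "groundedness": 0.88, "helpfulness": 0.80}
current = {"accuracy": 0.92, "groundedness": 0.81, "helpfulness": 0.79}

regressions = find_regressions(baseline, current)
for name, (old, new) in regressions.items():
    print(f"{name} regressed: {old} -> {new}")
```

In a pipeline, a non-empty result would typically block the release or trigger a manual review; the tolerance keeps small run-to-run noise from raising false alarms.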

Diverse Reference Data

Use large reference datasets that cover a variety of viewpoints, topics, styles, and situations. Consider including examples from a range of fields, languages, and cultural contexts. This breadth lets you assess your LLM’s skills in depth and identify any biases in its performance.
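One simple way to surface such biases is to break a metric down by group instead of reporting a single overall number. The sketch below computes accuracy per group and the gap between the best and worst group. The records, the group tags, and the exact-match scoring rule are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Exact-match accuracy for each value of the 'group' field."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        group = record["group"]
        totals[group] += 1
        correct[group] += int(record["prediction"] == record["reference"])
    return {group: correct[group] / totals[group] for group in totals}


# Hypothetical evaluation records tagged with the language of the prompt.
records = [
    {"group": "en", "prediction": "yes", "reference": "yes"},
    {"group": "en", "prediction": "no", "reference": "no"},
    {"group": "hi", "prediction": "yes", "reference": "no"},
    {"group": "hi", "prediction": "no", "reference": "no"},
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, f"largest gap between groups: {gap:.2f}")
```

A large gap between groups is a signal to collect more reference data for the weaker group or to investigate why the model underperforms there.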

Conclusion

Selecting the best LLM, and the metrics to evaluate it, may seem complicated at first, but it all comes down to determining what your company needs. Do you prioritize scalability, accuracy, or something more specific to your sector? By taking the time to investigate and align these priorities with the appropriate model, you can be sure that you’re selecting a collaborator rather than merely a tool to solve problems and spur innovation. Finding a model that fits your objectives and your particular circumstances matters more than finding the most sophisticated one.

Options range from industry-specific models to versatile, all-purpose models like GPT-4. The key is to examine the model’s suitability for your tasks carefully, take costs into account, and make sure it works well with your existing systems. With the right approach, you can leverage an LLM to enhance operations and grow your business.

Frequently Asked Questions

How well do LLMs integrate with other tools and platforms?

One of the strengths of LLMs is how easily they integrate with various datasets, APIs, and even other AI models. This opens up many possibilities for creating diverse and scalable applications.

How do you choose an LLM?

Selecting the right LLM for your company means carefully weighing several factors, including performance indicators, scalability, resource requirements, customization options, and ethical issues, so that the model fits your specific needs and goals.

How do you evaluate LLM prompts?

Determining how effective an LLM prompt is requires a thorough set of evaluation criteria. These criteria should cover a range of aspects of the model’s output, such as relevance and accuracy.

What are the core capabilities of the LLM?

LLMs are adaptable because they are trained on a wide variety of data. By applying this acquired knowledge, they can carry out many tasks, including sentiment analysis and text generation. They can also be adapted to specific tasks through fine-tuning.