AI adoption is growing rapidly across industries and use cases. For natural language technologies, the usual question is whether to use traditional NLP approaches or invest in LLM technologies. Comparing LLM vs NLP helps identify which technology best fits your specific project requirements.

Both AI technologies offer powerful capabilities for understanding, processing, generating, and interacting with human language. However, they take different approaches and serve different purposes depending on project complexity, requirements, and applications.

This comprehensive guide on AI LLM vs NLP examines the distinctive features and applications of each, the key differences between NLP and LLMs, their costs, and the ways they can be integrated, to help organizations make informed decisions about their AI strategy.

What is NLP?

NLP (natural language processing) is a branch of artificial intelligence that helps machines understand, interpret, and generate human language for useful purposes. Traditional NLP systems process text and speech data using rule-based methods and statistical models combined with machine learning algorithms.

NLP has been an important part of AI software development services for decades, serving as the foundation for many enterprise language solutions. It covers various techniques, including tokenization, part-of-speech tagging, named entity recognition, sentiment analysis, and other fundamental language processing tasks.

Distinctive Features of NLP

Traditional NLP systems have several key features that differentiate them from modern LLM approaches:

- Task-specific design: NLP systems are typically built for specific language tasks like sentiment analysis, entity extraction, or text classification.

- Modular architecture: Different components handle distinct aspects of language processing, forming a pipeline of specialized tools.

- Transparent reasoning: Rule-based NLP systems provide clear explanations of how they arrive at conclusions.

- Lightweight implementation: Many NLP solutions require relatively modest computational resources compared to modern LLMs.

- Domain customization: NLP systems can be effectively tailored to specific domains with relatively small amounts of annotated data.

- Established methodologies: Backed by decades of research and proven implementation strategies for enterprise applications.
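To make the "transparent reasoning" and "lightweight implementation" points concrete, here is a minimal sketch of a rule-based sentiment classifier in plain Python. The tiny word lists and the single negation rule are hypothetical and chosen only for illustration; real systems use curated lexicons or libraries such as NLTK or spaCy, whose APIs are not used here. The point is that every label can be traced back to the exact rules that produced it.

```python
import re

# Hypothetical, deliberately tiny sentiment lexicon (illustration only).
POSITIVE = {"good", "great", "excellent", "fast", "helpful"}
NEGATIVE = {"bad", "slow", "broken", "poor", "unhelpful"}
NEGATORS = {"not", "never", "no"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def classify(text: str) -> tuple[str, list[str]]:
    """Return a sentiment label plus the rules that fired, so the result is explainable."""
    tokens = tokenize(text)
    score = 0
    explanation = []
    for i, tok in enumerate(tokens):
        polarity = 1 if tok in POSITIVE else -1 if tok in NEGATIVE else 0
        if polarity == 0:
            continue
        # Simple negation rule: flip polarity if the previous token is a negator.
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
            explanation.append(f"'{tokens[i - 1]} {tok}' -> {polarity:+d} (negated)")
        else:
            explanation.append(f"'{tok}' -> {polarity:+d}")
        score += polarity
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return label, explanation


label, why = classify("Support was helpful, but the app is not fast.")
print(label)
for rule in why:
    print(rule)
```

Because the output includes the list of fired rules, a reviewer can see exactly why a text was labeled the way it was, which is the kind of auditability the feature list above refers to.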

Key NLP Applications

NLP technologies have been successfully deployed across numerous industries and use cases:

NLP in Healthcare

Healthcare organizations implement NLP to extract relevant information from clinical notes, automate medical coding, improve clinical documentation, and enhance patient care through better data utilization.

NLP in healthcare can also be used to identify medical entities, generate structured data from raw medical text, and standardize terminology.
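Standardizing terminology often comes down to mapping the many surface forms of a concept to one canonical term. The sketch below uses a hypothetical synonym table and longest-match lookup; real clinical systems map to curated vocabularies such as SNOMED CT or ICD-10, whose codes are not reproduced here.

```python
import re

# Hypothetical synonym table: surface form -> canonical term (not real ontology codes).
SYNONYMS = {
    "heart attack": "myocardial infarction",
    "mi": "myocardial infarction",
    "myocardial infarction": "myocardial infarction",
    "high blood pressure": "hypertension",
    "htn": "hypertension",
    "hypertension": "hypertension",
}
MAX_WORDS = max(len(k.split()) for k in SYNONYMS)


def extract_entities(note: str) -> list[tuple[str, str]]:
    """Find known terms in a clinical note and return (surface form, canonical term) pairs."""
    words = re.findall(r"[a-z0-9]+", note.lower())
    found = []
    i = 0
    while i < len(words):
        # Try the longest phrase first so "high blood pressure" wins over shorter matches.
        for n in range(min(MAX_WORDS, len(words) - i), 0, -1):
            phrase = " ".join(words[i:i + n])
            if phrase in SYNONYMS:
                found.append((phrase, SYNONYMS[phrase]))
                i += n
                break
        else:
            i += 1  # no known term starts here; move to the next word
    return found


print(extract_entities("Pt has high blood pressure (HTN) and a prior heart attack."))
```

This sketch ignores negation ("denies chest pain") and context, both of which production clinical NLP has to handle.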

Customer Service

NLP is also useful for building smart chatbots and virtual assistants that handle routine customer inquiries, categorizing support tickets, and analyzing customer feedback, improving accuracy and reducing operational costs.

Market Intelligence

NLP is also a great tool for marketers to analyze brand sentiment, track competitor activities, and spot emerging market trends by processing large volumes of data from online sources, social media posts, and relevant articles.

Regulatory Compliance

Different industries have different compliance requirements, and NLP can be a great tool for scanning documents to identify compliance issues, flag possible violations, and automate parts of the reporting process.
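A common starting point for compliance scanning is a set of explicit, auditable pattern rules. The sketch below flags two hypothetical issues, an unmasked 16-digit card number and an email address, and reports the line on which each was found. The rule names and patterns are assumptions for illustration, not a regulatory standard.

```python
import re
from dataclasses import dataclass

# Hypothetical compliance rules: rule name -> regular expression.
RULES = {
    "unmasked_card_number": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


@dataclass
class Finding:
    rule: str
    line_no: int
    match: str


def scan(document: str) -> list[Finding]:
    """Return every rule match with its 1-based line number, for human review."""
    findings = []
    for line_no, line in enumerate(document.splitlines(), start=1):
        for rule, pattern in RULES.items():
            for m in pattern.finditer(line):
                findings.append(Finding(rule, line_no, m.group()))
    return findings


doc = "Invoice 1042\nPaid with card 4111 1111 1111 1111\nContact: billing@example.com"
for f in scan(doc):
    print(f)
```

Each finding names the rule that triggered it, so flagged items can be reviewed and the rule set audited, which matters in regulated settings.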

What is an LLM?

Large language models (LLMs) are the most advanced evolution of AI in natural language processing. They are massive neural network models trained on huge text datasets. They can take a user's request, such as a question to answer or a piece of text to write, and produce contextually relevant text without task-specific training.

LLMs like GPT-4, Claude, and others are fundamentally transformer-based models that have been trained on unprecedented amounts of text data. Their generative capabilities have revolutionized what’s possible in AI-driven communication, creating new opportunities for generative AI development services across industries.

Distinctive Features of LLM

LLMs offer several groundbreaking AI capabilities that differentiate them from traditional NLP approaches:

- Few-shot learning: LLMs can adapt to new tasks with minimal examples, often requiring just a few demonstrations to perform effectively.

- Generative power: They excel at producing high-quality, contextually appropriate text across diverse domains and styles.

- Multi-task performance: A single LLM can handle multiple language tasks without specialized training for each function.

- Emergent abilities: As LLMs scale in size, they develop capabilities that weren’t explicitly programmed, such as reasoning and problem-solving.

- Natural interactions: They support conversational interfaces that feel more natural and human-like than previous technologies.

- Zero-shot capabilities: Modern LLMs can perform tasks they weren’t explicitly trained on by following instructions.

- Conceptual understanding: LLMs demonstrate a broad understanding of concepts beyond simple pattern recognition.

Key LLM Applications

The versatility of LLMs has enabled a new generation of AI applications:

Content Creation

LLMs can be used to create blogs, marketing copy, creative content, product descriptions, SEO-driven content, mailing campaigns, and virtually any other content. Given specific instructions and a set of user-defined rules, LLMs can be tailored to maintain a consistent brand tone and message across all of an organization's channels.

Advanced Conversational Agents

LLM-powered chatbots extend the capabilities of NLP-driven chatbots, holding more open-ended discussions with context awareness and conversational AI capabilities. They can help with escalating client issues, troubleshooting problems, finding solutions, and many similar use cases.

Code Generation and Assistance

Developers also use LLMs to generate code snippets, debug existing code, and get programming assistance, which can significantly accelerate software development cycles. For instance, ChatGPT lets developers describe a specific coding task and preview the code it generates before using it.

Knowledge Management

Organizations implement LLMs to gather information from internal documents, create summaries of complex materials, and answer employee questions about company knowledge.

Personalized Education

LLMs create customized learning materials, provide tutoring in various subjects, and adapt explanations based on student feedback and comprehension levels. To learn more about AI in education or get intelligent education software development services, you can look for AI development companies with expertise in education and other sectors.

NLP vs LLM: A Comparative Tabular Analysis

Understanding the fundamental differences between natural language processing and LLM approaches is crucial for developing an effective AI strategy.

| Aspect | Traditional NLP | Large Language Models (LLMs) |
| --- | --- | --- |
| Scope | Uses specialized components for tasks like named entity recognition, sentiment analysis, or text classification in a pipeline architecture. | Offers general-purpose language capabilities in a single system, adaptable through prompting rather than architectural changes. |
| Training Data | Requires carefully annotated, task-specific datasets, with significant human effort for each new task or domain. | Pre-trained on massive corpora containing billions to trillions of tokens, with broad generalization capabilities, though fine-tuning may still be needed. |
| Model Complexity | Ranges from rule-based approaches to complex statistical models but remains interpretable and manageable. | Contains billions to hundreds of billions of parameters, requiring specialized hardware and making internal mechanisms harder to interpret. |
| Performance | Achieves high accuracy in well-defined tasks with structured evaluation metrics, excelling with consistent language patterns. | Handles diverse tasks without retraining but may lack precision in highly technical or regulated domains. |
| Use Cases | Ideal for specific tasks in regulated environments where explainability, consistency, and precision are crucial. Suitable when computational resources are limited. | Best for flexible applications requiring creativity, natural conversation, and adaptation to new contexts without retraining. |
| Examples | Google BERT, spaCy, NLTK, IBM Watson NLP, Stanford CoreNLP, fastText | ChatGPT, Claude, Gemini (formerly Bard), LLaMA, Perplexity |

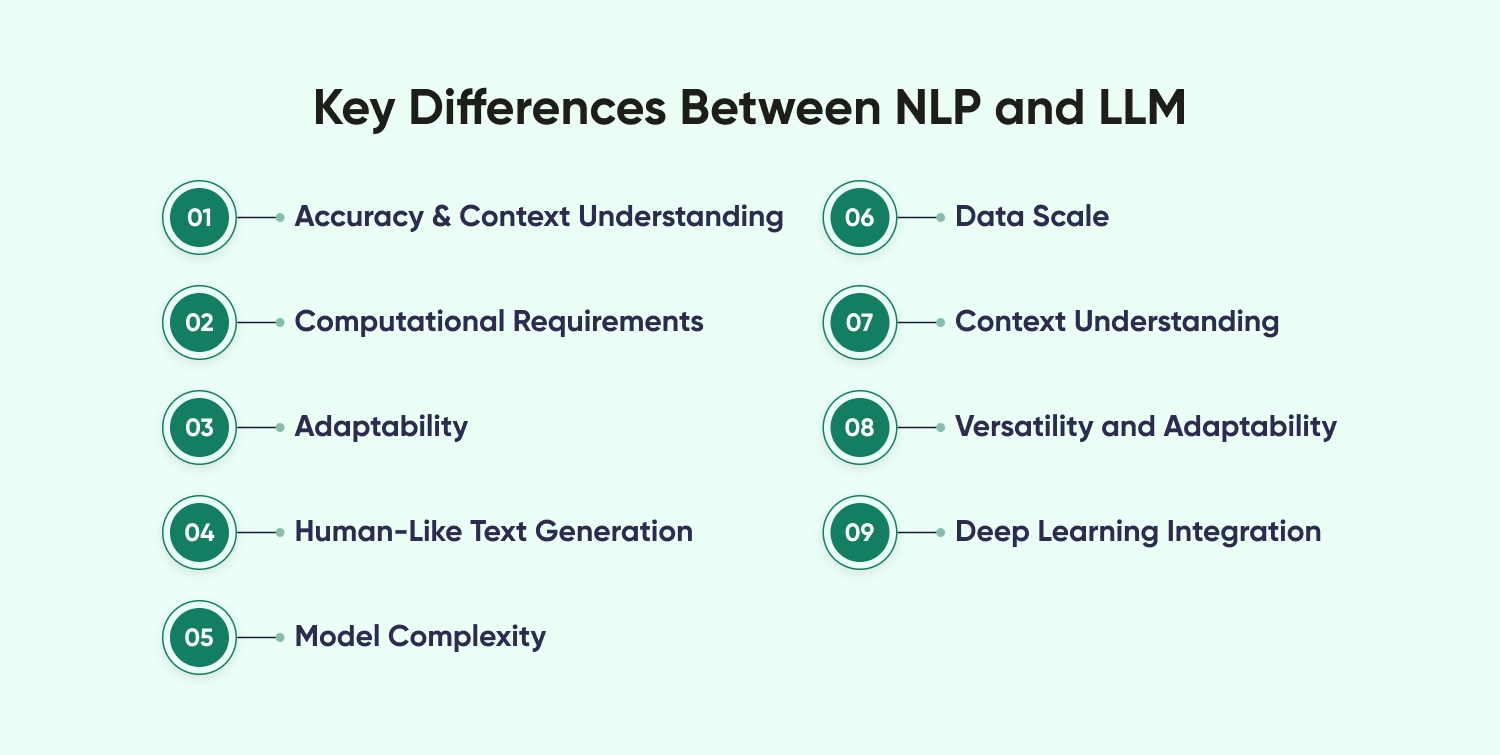

Key Differences Between NLP and LLM

Accuracy & Context Understanding

While traditional NLP systems can achieve high accuracy on specific tasks, they often struggle with ambiguity and contextual nuance, since they typically process short text segments with limited context windows.

The newer generation of LLMs demonstrates superior contextual understanding: they maintain coherence over longer passages, capture subtle nuances in language, and consider broader context to produce relevant, coherent outputs for complex queries.

Computational Requirements

NLP pipelines can typically run on standard hardware without specialized accelerators. This makes NLP accessible to organizations of all sizes without major concerns about resource limitations. Such lightweight solutions are also easy to deploy and can run with minimal latency.

LLMs and other generative AI models demand far more computational resources, both for training on large datasets and for running inference over long inputs. This typically requires GPUs or TPUs, which increases the initial investment and overall operational costs.

Adaptability

Adapting traditional NLP to a new industry requires developers to collect and annotate new training data, modify rules, or retrain components. This is tedious and labor-intensive compared with LLMs, which can adapt to new domains through prompt engineering or few-shot learning.

Here, a prompt engineer only needs to provide a set of instructions or a few examples rather than building and annotating a new training dataset.
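Adapting an LLM through few-shot prompting means writing instructions and examples rather than retraining. The sketch below only assembles the prompt text; the labels, examples, and the `build_few_shot_prompt` helper are hypothetical, and sending the prompt to a model is left to whichever provider SDK you use, so no API call is shown.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble an instruction, labeled examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Text: {text}\nLabel: {label}\n")
    # The model is expected to continue the pattern after the final "Label:".
    parts.append(f"Text: {query}\nLabel:")
    return "\n".join(parts)


# Hypothetical insurance-claims domain: adapting to it needs only new examples.
prompt = build_few_shot_prompt(
    "Classify each insurance claim note as AUTO, HOME, or HEALTH.",
    [
        ("Rear bumper damaged in parking lot collision.", "AUTO"),
        ("Water leak from burst pipe flooded the basement.", "HOME"),
    ],
    "Patient billed twice for the same MRI scan.",
)
print(prompt)
```

Moving to another domain means swapping the instruction and the example pairs, with no annotation pipeline or retraining step.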

Human-Like Text Generation

Most traditional NLP systems generate text using templates or predefined patterns, so their outputs can feel mechanical or repetitive, and their generation capabilities are typically limited to specific formats.

Advanced LLMs produce remarkably human-like text with natural variation in style, tone, and structure: they can mimic different writing styles, adapt to formal or casual registers, and generate creative content that reads as if written by a person.

Model Complexity

Traditional NLP approaches often use simpler, more controlled models for each component in the pipeline, and their modular designs ease debugging and targeted improvements to specific aspects.

Today's most advanced large language models are among the most complex AI systems ever built, with architectures containing billions of parameters. This enables LLMs to produce impressive answers or solutions, but at the cost of high complexity: it is difficult to understand how the model arrived at a given answer.

Data Scale

Natural language processing systems can function effectively with modest amounts of task-specific data, making them viable for niche domains with limited available text resources.

Large language models require training on unprecedented volumes of text data, often measured in trillions of tokens. Performance typically improves with data scale, but this creates significant barriers to entry for organizations wanting to train their own models.

Context Understanding

Traditional approaches process text with limited context windows, often analyzing sentences or paragraphs in isolation and struggling with references across longer passages or documents.

Modern LLMs work with much larger context windows and can maintain context across a chain of messages, which improves their conversational capabilities. This lets them give relevant, context-aware answers, adding value for users who are researching a topic in detail.
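Because every model still has a finite context window, chat applications typically keep the system instruction plus as many of the most recent messages as fit within a token budget. The sketch below approximates tokens by counting whitespace-separated words, which is an assumption (real tokenizers count differently), and the budget value is hypothetical.

```python
def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def trim_history(system: str, messages: list[dict], budget: int) -> list[dict]:
    """Keep the system message and the newest messages that fit within the budget."""
    remaining = budget - count_tokens(system)
    kept = []
    # Walk backwards so the most recent turns are kept first.
    for msg in reversed(messages):
        cost = count_tokens(msg["content"])
        if cost > remaining:
            break
        kept.append(msg)
        remaining -= cost
    return [{"role": "system", "content": system}] + list(reversed(kept))


history = [
    {"role": "user", "content": "What is tokenization in NLP?"},
    {"role": "assistant", "content": "Splitting text into units such as words or subwords."},
    {"role": "user", "content": "And how do LLMs use it?"},
]
for m in trim_history("You are a helpful tutor.", history, budget=20):
    print(m["role"], "->", m["content"])
```

Dropping the oldest turns first is the simplest policy; some applications instead summarize older turns so that earlier context is compressed rather than lost.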

Versatility and Adaptability

Traditional NLP solutions work well for the specific tasks they are configured for but require major rework for new applications, which increases resource requirements and development time for each new use case. A single LLM, by contrast, can perform many tasks with prompt engineering, from summarization and translation to converting content into tables and bullet points, which makes it more flexible and able to deliver more value.

Deep Learning Integration

While modern language processing increasingly incorporates deep learning components, many traditional systems still rely partially on statistical methods, rule-based approaches, and classical machine learning.

LLMs are fundamentally deep learning systems, typically based on the transformer architecture, which revolutionized natural language processing through its ability to process text in parallel and capture long-range dependencies.
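The core operation behind that parallelism is scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V, in which every token's query is compared with every token's key. Below is a minimal pure-Python sketch of a single attention head with made-up 2-dimensional vectors. Real models use learned projections, many heads, and tensor libraries running on GPUs.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for one head: softmax(Q K^T / sqrt(d)) V."""
    d = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Score this query against every key; rows are independent, so they can run in parallel.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights


# Three tokens with made-up 2-d query/key/value vectors (illustration only).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, w = attention(Q, K, V)
for row in w:
    print([round(x, 3) for x in row])
```

Every token attends directly to every other token in one step, which is how transformers capture long-range dependencies without processing text strictly left to right.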

How to Choose Between NLP and LLM

With the differences between NLP and LLMs in mind, selecting the right approach for your organization depends on several key factors:

| Factor | Choose NLP When | Choose LLM When |
| --- | --- | --- |
| Project Scope | The task is well-defined and structured, such as document classification or entity extraction. | The task involves multiple language-based activities or requires flexible, open-ended responses. |
| Budget Consideration | Cost constraints exist, and standard hardware needs to be used. | There is enough budget to invest in cloud services or high-end computing infrastructure. |
| Computational Resources | Access to high-end GPUs or cloud processing is limited. | The organization has access to powerful computing resources or cloud-based AI services. |
| Data Availability | The task relies on structured or domain-specific datasets. | The project can leverage diverse, large-scale text data for learning and adaptation. |
| Privacy Importance | Sensitive data must remain on-premises, and strict data governance is needed. | Cloud-based deployment or third-party AI services are acceptable. |

AI LLM vs NLP: Cost Comparison

Understanding the financial implications of NLP and LLMs is crucial for strategic planning and for determining which one better fits your business goals.

Cost of Implementing NLP

Traditional NLP implementations typically involve the following cost factors:

- Development costs: Engineering time for building and connecting specialized components

- Data annotation: Human effort required to create training datasets for supervised learning approaches

- Maintenance: Ongoing updates to rules, models, and components as language patterns or requirements evolve

- Infrastructure: Relatively modest computing resources for training and deployment

- Scaling: Linear cost increases as processing volume grows

NLP solutions generally feature more predictable costs and lower initial investment, making them accessible to organizations of various sizes. The primary expenses often relate to human expertise rather than computing infrastructure.

Cost of Implementing LLM

LLM implementation costs include:

- Model access: Subscription fees for commercial LLM APIs or significant infrastructure costs for self-hosting

- Computing resources: Substantial GPU/TPU requirements for training, fine-tuning, or inference

- Expertise: Specialized knowledge in prompt engineering and LLM optimization

- Integration: Systems to connect LLMs with existing business processes and data sources

- Inference costs: Ongoing expenses that scale with usage, particularly for cloud-based solutions

While LLMs require higher initial investment and operational expenses, they can provide cost efficiencies through their versatility and reduced need for task-specific development. For organizations handling multiple language tasks, the consolidated capabilities of LLMs may offer better long-term value despite higher upfront costs.
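The trade-off between per-task development costs and usage-based costs can be made concrete with a simple break-even calculation. Every figure below is a hypothetical placeholder, not a real vendor price; substitute your own estimates. The model assumes NLP has a low shared setup and low per-request cost but a high development and annotation cost per task, while an LLM has a higher shared setup and inference cost but little per-task work.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Hypothetical annual cost model: shared setup + per-task setup + per-request cost."""
    shared_setup: float      # one-time platform or integration cost shared by all tasks
    setup_per_task: float    # development/annotation (NLP) or prompt work (LLM) per task
    cost_per_request: float  # infrastructure or inference cost per processed request

    def total(self, tasks: int, requests: int) -> float:
        return self.shared_setup + tasks * self.setup_per_task + requests * self.cost_per_request


# Placeholder numbers for illustration only; replace with your own estimates.
nlp = CostModel(shared_setup=5_000, setup_per_task=40_000, cost_per_request=0.0005)
llm = CostModel(shared_setup=60_000, setup_per_task=5_000, cost_per_request=0.01)

requests = 1_000_000
for tasks in (1, 2, 3, 4):
    n, l = nlp.total(tasks, requests), llm.total(tasks, requests)
    print(f"{tasks} task(s): NLP ${n:,.0f} vs LLM ${l:,.0f}")
```

With these placeholder numbers, NLP is cheaper for a single task ($45,500 vs $75,000) and the LLM becomes cheaper from the second task onward ($80,000 vs $85,500). Different assumptions can move the crossover point or remove it entirely.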

How to Integrate NLP and LLM?

Rather than viewing NLP and LLM as competing approaches, forward-thinking organizations are finding ways to leverage the strengths of both technologies in complementary architectures:

Hybrid Systems

Combine traditional NLP components for structured, high-precision tasks with LLMs for flexibility and natural language generation. For example, use specialized NLP for entity extraction and data normalization, then employ LLMs for generating insights or conversational interfaces based on that structured data.
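A hybrid pipeline of this kind can be sketched in a few lines: a deterministic extraction step produces structured fields, and only those fields are handed to the LLM for phrasing. The regex patterns, field names, and the `call_llm` function are hypothetical; `call_llm` is a stand-in stub so the example runs without any model or API key.

```python
import re


def extract_order_fields(text: str) -> dict:
    """Deterministic NLP step: pull structured fields out of free text with explicit patterns."""
    order = re.search(r"\border\s*#?\s*(\d+)", text, re.IGNORECASE)
    amount = re.search(r"\$(\d+(?:\.\d{2})?)", text)
    date = re.search(r"\b(\d{4}-\d{2}-\d{2})\b", text)
    return {
        "order_id": order.group(1) if order else None,
        "amount": float(amount.group(1)) if amount else None,
        "date": date.group(1) if date else None,
    }


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call; replace with your provider's SDK."""
    return f"[LLM draft based on prompt of {len(prompt)} characters]"


def draft_reply(customer_message: str) -> tuple[dict, str]:
    fields = extract_order_fields(customer_message)
    # The LLM only phrases the reply; the facts come from the structured fields.
    prompt = (
        "Write a short, polite reply to a customer. Use only these facts:\n"
        f"{fields}\n"
        "If a value is None, ask the customer for it."
    )
    return fields, call_llm(prompt)


fields, reply = draft_reply("Hi, order #5521 for $49.99 placed on 2024-03-02 never arrived.")
print(fields)
print(reply)
```

Keeping extraction deterministic means the facts in the reply can be tested and audited independently of the model that phrases them.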

LLM Augmentation

Enhance LLM capabilities with specialized NLP tools for tasks where precision is crucial. This approach uses traditional components to verify or augment LLM outputs, particularly in regulated domains like healthcare or finance.
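One simple form of this verification is to check that every figure in an LLM's answer actually appears in the source material, and to route the answer for human review if it does not. The sketch below checks numbers only, at the string level (so "20" and "20.0" would not match); the regex and the example texts are assumptions, and real verifiers would also compare entities, units, and dates.

```python
import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(source: str, llm_answer: str) -> set[str]:
    """Return numbers that appear in the LLM answer but nowhere in the source text."""
    return set(NUMBER.findall(llm_answer)) - set(NUMBER.findall(source))


source = "Patient prescribed 20 mg atorvastatin daily; LDL measured at 3.4 mmol/L."
answer = "The patient takes 40 mg of atorvastatin daily and has an LDL of 3.4 mmol/L."

problems = unsupported_numbers(source, answer)
if problems:
    print("Needs human review, unsupported values:", sorted(problems))
else:
    print("All numbers are grounded in the source.")
```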

Retrieval-Augmented Generation (RAG)

Another approach is to use NLP techniques to retrieve relevant information from enterprise knowledge bases, then use LLMs to present that information in natural language and rich-content formats. Grounding the LLM's response in retrieved source content helps keep it accurate while still benefiting from the LLM's communication capabilities.
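The retrieval half of RAG can be as simple as ranking documents by TF-IDF cosine similarity and pasting the best matches into the prompt. The sketch below implements that in plain Python over three hypothetical knowledge-base snippets. Production systems more often use dense embeddings and a vector index, and the final LLM call is omitted here.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs: list[str]) -> tuple[list[dict], dict]:
    """Build a TF-IDF vector (term -> weight) for each document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}  # smoothed IDF
    vecs = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vecs, idf


def cosine(a: dict, b: dict) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    vecs, idf = tfidf_vectors(docs)
    # Query terms unseen in the corpus are ignored, since they have no IDF weight.
    q = {t: tf * idf[t] for t, tf in Counter(tokenize(query)).items() if t in idf}
    ranked = sorted(range(len(docs)), key=lambda i: cosine(q, vecs[i]), reverse=True)
    return [docs[i] for i in ranked[:k]]


# Hypothetical knowledge-base snippets.
kb = [
    "Employees accrue 1.5 vacation days per month of service.",
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN must be used when accessing internal systems remotely.",
]
question = "How many vacation days do employees get per month?"
context = "\n".join(retrieve(question, kb, k=1))
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

The instruction to answer "using only this context" is what ties the LLM's output back to the retrieved source text.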

Progressive Implementation

Start with targeted NLP solutions for immediate business needs, then selectively integrate LLM capabilities as requirements evolve and the technology matures. This strategy allows organizations to balance innovation with practical constraints.

Domain Adaptation

Use specialized NLP to process domain-specific terminology and concepts, then leverage LLMs for broader language tasks that benefit from their general knowledge. This combination is particularly effective in technical fields with specialized vocabularies.

Future of NLP & LLM: Evolving Trends and Challenges

As organizations build smarter enterprises with AI, several key trends are shaping the future of language technologies:

Convergence of Approaches

The distinction between traditional natural language processing vs large language models is gradually disappearing as developers incorporate elements of both technologies. Future systems will likely feature modular architectures with LLM components working alongside specialized NLP tools.

Specialized LLMs

Smaller, domain-specific LLMs trained for particular industries or applications will offer improved efficiency and performance for targeted use cases, making advanced language capabilities more accessible to a broader range of organizations.

Enhanced Interpretability

Ongoing research aims to improve LLM transparency and interpretability, addressing a key limitation relative to traditional NLP approaches. Progress here will be crucial for adoption in regulated industries.

Multimodal Integration

Future language systems will increasingly integrate text processing with other modalities like vision, audio, and structured data, creating more comprehensive AI solutions that better reflect human communication patterns.

Edge Deployment

Compact versions of LLMs capable of running on edge devices will expand the range of possible applications, particularly for scenarios requiring offline operation or stricter data privacy.

LLM Evaluation Frameworks

More sophisticated LLM evaluation frameworks will emerge for measuring performance across dimensions like accuracy, safety, and fairness, helping organizations make informed decisions about implementing these technologies.

Ethical and Regulatory Development

Both NLP and LLM fields will continue navigating evolving regulatory landscapes regarding data privacy, bias mitigation, and appropriate use cases, shaping how these technologies can be deployed.

Final Thoughts on NLP Models vs LLM Models

The choice between NLP and LLMs is not black and white. You need a clear AI strategy and roadmap to achieve your desired outcomes, and for many businesses that strategy will incorporate elements of both, using their complementary strengths. By understanding the fundamental differences between NLP and LLM technologies, firms can make informed decisions about when to hire AI developers specializing in each approach.

FAQs on NLP vs LLM

Is LLM Better Than NLP?

In the AI LLM vs NLP comparison, LLMs can be considered an evolution of traditional NLP techniques. Each has its own purpose: LLMs use deep learning to produce more context-aware, accurate responses, whereas traditional NLP is ideal for predefined structures or tasks.

Is LLM Generative AI or NLP?

LLMs are a form of generative AI, and they also fall within the broader field of NLP. They use advanced techniques to generate human-like text that can greatly improve AI-driven tasks such as customer interactions and content generation.

What Distinguishes Large Language Models From Traditional Natural Language Processing Models?

The primary difference between NLP and LLM is in the scale and approach to data processing. Traditional NLP models rely on rule-based or statistical methods. In contrast, LLMs use large datasets and deep learning models, offering far more flexibility. With reliable Generative AI Development Services, organizations can use LLMs to create highly scalable and intelligent systems for a range of use cases.

What Are the Advantages of Using LLMs Over Traditional NLP Models?

LLMs provide more advanced, context-aware solutions, whereas traditional NLP is more useful for specific, predefined tasks. If we compare LLM vs NLP use cases, LLMs are the clear winner for tasks that require deep understanding, such as content creation, translation, and summarization. Businesses looking to use the power of LLMs can rely on CMARIX AI software development services to build applications that deliver high-quality outputs with minimal manual intervention.

What Industries Benefit the Most From NLP and LLM?

NLP in healthcare is just one example where both NLP and LLMs can be highly effective. Industries such as fintech, education, and customer service can integrate NLP and LLMs into bespoke AI-driven software solutions. Whether you need NLP, an LLM, or both, you should hire AI developers who can help you with a strategic roadmap and the development process.