Node.js is a JavaScript runtime environment that changed the way many developers handle input and output (I/O) operations. One of the features that makes Node.js stand out is its ability to process large amounts of data efficiently through streams, which let developers handle data flow, especially for large data sets, without loading everything into memory at once.

Streams are sequences of data that are processed incrementally. They let developers read and process data piece by piece, in chunks, instead of reading all of it into memory at once. Node.js has four types of streams: Readable, Writable, Duplex, and Transform.

In this article, we will explore everything you need to know about Node.js streams, including their advantages, types, and techniques such as piping and stream chaining. With this knowledge, you will be able to use Node.js streams to handle I/O operations efficiently in your projects.

What Are Streams in Node.js?

In Node.js, streams are a powerful concept that enables developers to handle data flow efficiently, especially when dealing with large data sets. Streams can be thought of as a sequence of data that is processed sequentially.

The main advantage of using streams in Node.js development is that they allow data to be processed piece by piece, rather than reading and processing all of it at once, which can be memory-intensive and slow. Streams come in four types: Readable, Writable, Duplex, and Transform.

Readable streams allow data to be read from a source, while Writable streams allow data to be written to a destination. Duplex streams allow both reading and writing, while Transform streams allow data to be modified as it flows through the stream. Overall, Node.js streams are a powerful feature that can help developers handle I/O operations efficiently, making them an essential part of Node.js development.

Benefits of Using Node.js

Node.js is an open-source JavaScript runtime environment with many benefits for developers. One of its main advantages is that it is based on JavaScript, a widely used language, which makes it easy to learn and use. Its non-blocking, event-driven I/O model also makes it fast and scalable, which is why it is a popular choice for building real-time applications.

Node.js also has a large and active community that creates many helpful packages and tools. Another advantage of Node.js is that it can be used on the server side, making it possible to use the same language and tools for both client-side and server-side development. For these reasons, it is a great choice for businesses looking to hire Node.js developers to build high-performance, scalable applications.

Types of Node Streams

Node.js streams are a powerful feature that allows developers to handle data flow efficiently, especially when dealing with large data sets. There are four types of streams in Node.js: Readable streams, Writable streams, Duplex streams, and Transform streams. In this section, we will discuss each of these stream types in more detail.

1. Readable Streams

Readable streams are used for reading data from a source, such as a file or network connection. They can be consumed in two main ways: by listening for 'data' events (flowing mode) or by calling the readable.read() method, usually from a 'readable' event handler (paused mode). A 'data' event is emitted whenever a chunk is available, while readable.read() pulls data out of the stream's internal buffer on demand; passing a size, as in readable.read(size), requests that many bytes.
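The two consumption styles can be sketched side by side. This is a minimal illustration, not a production pattern: the in-memory source built with Readable.from() stands in for a file or socket, and the helper names makeSource, readWithDataEvents, and readWithRead are hypothetical.

```javascript
const { Readable } = require('stream');

// Builds a small in-memory source. Readable.from() normally creates an
// object-mode stream; objectMode: false makes it emit Buffer chunks,
// the way a file or socket stream would.
function makeSource() {
  return Readable.from(['hello ', 'stream ', 'world'], { objectMode: false });
}

// Flowing mode: the stream pushes chunks to the 'data' listener as soon
// as they are available.
function readWithDataEvents(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('data', (chunk) => chunks.push(chunk));
    stream.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
    stream.on('error', reject);
  });
}

// Paused mode: 'readable' signals that data is buffered, and read() pulls
// it out until it returns null (nothing left in the buffer for now).
function readWithRead(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('readable', () => {
      let chunk;
      while ((chunk = stream.read()) !== null) {
        chunks.push(chunk);
      }
    });
    stream.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
    stream.on('error', reject);
  });
}

readWithDataEvents(makeSource()).then((text) => console.log('data events:', text));
readWithRead(makeSource()).then((text) => console.log('read():', text));
```

Both functions collect the same text; the difference is who drives the flow. With 'data' the stream pushes, with read() the consumer pulls.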

2. Writable Streams

Writable streams are used for writing data to a destination, such as a file or network connection. Data is sent to a writable stream with its write() method, and the end() method signals that no more data will be written. write() returns false when the stream's internal buffer is full; the caller should then wait for the 'drain' event before writing more, a mechanism known as backpressure.
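Here is a minimal sketch of writing with write(), respecting backpressure, and finishing with end(). The collector stream and the helper names createCollector and writeAll are hypothetical; the tiny highWaterMark and the asynchronous callback exist only to make write() return false quickly so the 'drain' path actually runs.

```javascript
const { Writable } = require('stream');
const { once } = require('events');

// A toy Writable that records every chunk written to it.
function createCollector() {
  const received = [];
  const sink = new Writable({
    highWaterMark: 4, // bytes buffered before write() starts returning false
    write(chunk, encoding, callback) {
      received.push(chunk.toString());
      // Simulate a slow destination by completing the write asynchronously.
      setImmediate(callback);
    },
  });
  return { sink, received };
}

// Writes each item, pausing for 'drain' whenever write() reports a full
// buffer, then calls end() and waits until all data has been flushed.
async function writeAll(stream, items) {
  for (const item of items) {
    if (!stream.write(item)) {
      await once(stream, 'drain');
    }
  }
  stream.end();
  await once(stream, 'finish');
}

const { sink, received } = createCollector();
writeAll(sink, ['alpha', 'beta', 'gamma']).then(() => {
  console.log(received.join(' '));
});
```

Waiting for 'drain' keeps the amount of buffered data bounded even when the destination is slower than the producer.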

3. Duplex Streams

Duplex streams are streams that allow both reading and writing of data. They are essentially a combination of Readable and Writable streams. Duplex streams are commonly used for network communication and other scenarios where both reading and writing are necessary.
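A TCP socket from the net module is a real-world Duplex: you write requests to it and read responses from it. Since a socket needs a network peer, the sketch below instead uses a hypothetical GreeterDuplex class whose two sides are deliberately independent, to show that a Duplex simply implements both _write() and _read().

```javascript
const { Duplex } = require('stream');

// Hypothetical Duplex: the writable side records what it receives in
// `log`, and the readable side emits a single fixed greeting.
class GreeterDuplex extends Duplex {
  constructor(options) {
    super(options);
    this.log = [];
    this.sent = false;
  }

  // Writable side: called for each chunk passed to write().
  _write(chunk, encoding, callback) {
    this.log.push(chunk.toString());
    callback();
  }

  // Readable side: called when a consumer wants more data.
  _read() {
    if (!this.sent) {
      this.sent = true;
      this.push('hello from the readable side');
    } else {
      this.push(null); // signal the end of the readable side
    }
  }
}

const duplex = new GreeterDuplex();
duplex.on('data', (chunk) => console.log('read:', chunk.toString()));
duplex.write('message for the writable side');
duplex.end();
duplex.on('finish', () => console.log('written:', duplex.log));
```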

4. Transform Streams

Transform streams are a special type of Duplex stream that allows data to be modified as it flows through the stream. They can be used for tasks such as compression, encryption, or data manipulation. A Transform stream has a writable side and a readable side: data written to the writable side is modified and then made available on the readable side.
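Node's built-in zlib.createGzip() and zlib.createGunzip() are ready-made Transform streams for the compression case. The sketch below, with hypothetical helpers collect and gzipRoundTrip, compresses a string and decompresses it again using stream/promises pipeline() (available in Node.js 15 and later).

```javascript
const { Readable, Writable } = require('stream');
const { pipeline } = require('stream/promises');
const zlib = require('zlib');

// A Writable that stores every chunk it receives in `chunks`.
function collect(chunks) {
  return new Writable({
    write(chunk, encoding, callback) {
      chunks.push(chunk);
      callback();
    },
  });
}

// Bytes written into gzip come out compressed; bytes written into gunzip
// come out as the original data.
async function gzipRoundTrip(text) {
  const compressed = [];
  await pipeline(Readable.from([Buffer.from(text)]), zlib.createGzip(), collect(compressed));

  const restored = [];
  await pipeline(Readable.from([Buffer.concat(compressed)]), zlib.createGunzip(), collect(restored));

  return {
    compressedBytes: Buffer.concat(compressed).length,
    restoredText: Buffer.concat(restored).toString('utf8'),
  };
}

gzipRoundTrip('stream '.repeat(1000)).then(({ compressedBytes, restoredText }) => {
  console.log(`${restoredText.length} characters compressed to ${compressedBytes} bytes`);
});
```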

In addition to these four main types, there are two related concepts worth knowing: object mode, which any stream type can use, and the ability to pause and resume Readable streams.

1. Object Mode Streams:

Object mode streams are streams created with the objectMode option, which lets them read or write arbitrary JavaScript values instead of only strings and Buffers. They can be useful when complex data structures need to be passed along, such as parsed JSON records.
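A minimal sketch of object mode, assuming hypothetical records with name and age fields: Readable.from() produces an object-mode stream by default, and a Transform with writableObjectMode accepts those objects and turns each qualifying one into a line of JSON text. The helpers createAdultFilter and toJsonLines are illustrative names only.

```javascript
const { Readable, Transform } = require('stream');

// Hypothetical filter: accepts record objects on its writable side and
// emits one line of JSON text per adult on its readable side.
function createAdultFilter() {
  return new Transform({
    writableObjectMode: true, // chunks written in are plain objects
    readableObjectMode: false, // chunks read out are Buffers of JSON text
    transform(record, encoding, callback) {
      if (record.age >= 18) {
        callback(null, JSON.stringify(record) + '\n');
      } else {
        callback(); // drop the record without emitting anything
      }
    },
  });
}

// Readable.from() creates an object-mode stream by default, so each
// array element becomes one chunk.
async function toJsonLines(records) {
  let output = '';
  const stream = Readable.from(records).pipe(createAdultFilter());
  for await (const chunk of stream) {
    output += chunk.toString();
  }
  return output;
}

toJsonLines([
  { name: 'alice', age: 31 },
  { name: 'bob', age: 17 },
  { name: 'carol', age: 45 },
]).then((text) => process.stdout.write(text));
```

Setting the two object-mode flags separately is what lets a single Transform bridge between object chunks and text chunks.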

2. Pause/Resume Streams:

Pausing and resuming is not a separate stream class: any Readable stream can be taken out of flowing mode with pause() and put back into it with resume(). This is useful when data needs to be processed in batches or when the rate of processing needs to be controlled, for example while waiting for a slow database write.
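The batching case can be sketched as follows. saveBatch is a hypothetical stand-in for slow work such as a database insert, and processInBatches is an illustrative helper: it pauses the stream while each full batch is saved and resumes it afterwards.

```javascript
const { Readable } = require('stream');

// Hypothetical slow task, standing in for something like a database insert.
function saveBatch(batch, saved) {
  return new Promise((resolve) => {
    setTimeout(() => {
      saved.push(batch);
      resolve();
    }, 10);
  });
}

// Collects items into batches of `size`. pause() stops 'data' events while
// a batch is being saved, and resume() restarts the flow afterwards.
function processInBatches(stream, size) {
  return new Promise((resolve, reject) => {
    const saved = [];
    let batch = [];
    let pending = Promise.resolve(); // the save currently in progress, if any

    stream.on('data', (item) => {
      batch.push(item);
      if (batch.length === size) {
        const full = batch;
        batch = [];
        stream.pause();
        pending = saveBatch(full, saved).then(() => stream.resume(), reject);
      }
    });

    // 'end' may fire while the last save is still running, so wait for it
    // before saving any leftover items.
    stream.on('end', () => {
      pending
        .then(() => (batch.length > 0 ? saveBatch(batch, saved) : undefined))
        .then(() => resolve(saved), reject);
    });
    stream.on('error', reject);
  });
}

processInBatches(Readable.from([1, 2, 3, 4, 5]), 2).then((batches) => {
  console.log(batches);
});
```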

Understanding the types of streams in Node.js is essential for building efficient and scalable applications. Whether you are reading data from a source, writing data to a destination, or transforming data as it flows through the stream, there is a stream type in Node.js that can handle the job. With this knowledge, developers can make the most of the powerful features that Node.js streams provide.

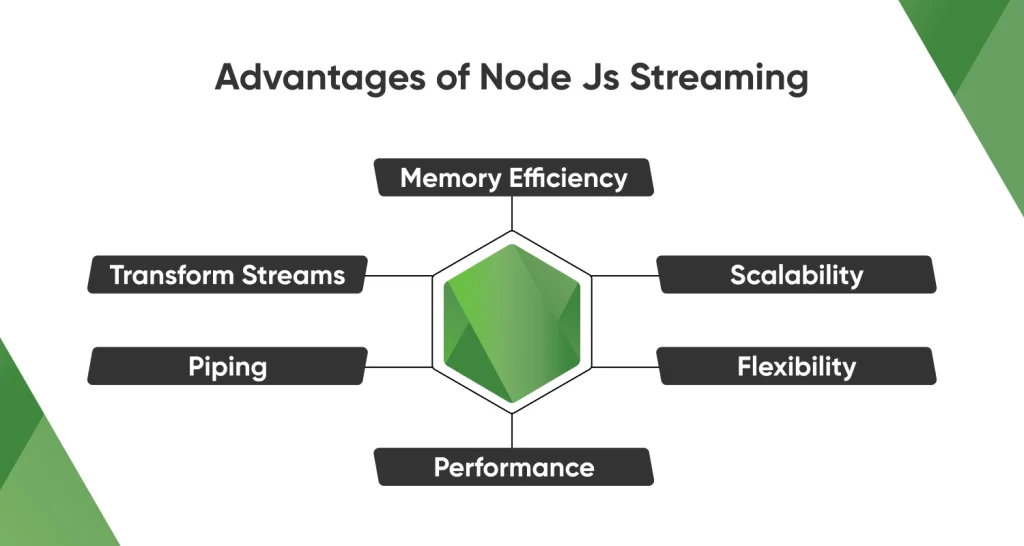

Advantages of Node.js Streaming

Node.js streams are a powerful feature that allows developers to handle data flow efficiently, especially when dealing with large data sets. In this section, we will discuss the advantages of using Node.js streams in detail.

- Memory efficiency: One of the biggest advantages of using Node.js streams is that they enable memory-efficient processing of large data sets. This is because streams allow data to be processed piece by piece, rather than reading and processing all the data at once. This can help prevent out-of-memory errors and reduce the risk of performance issues.

- Scalability: Node.js streams are also scalable, which means they can handle large amounts of data and still maintain high performance. This is particularly important for real-time applications and other scenarios where data needs to be processed quickly and efficiently.

- Flexibility: Node.js streams are also very flexible and can be used for a variety of purposes, from reading and writing files to processing real-time data streams. This makes them a great choice for developers looking to build applications that require a high degree of flexibility and versatility.

- Performance: Node.js streams are designed for high performance and can help improve the overall performance of an application. Because processing can begin as soon as the first chunk arrives, rather than after the whole data set has loaded, streams can reduce latency and improve the responsiveness of applications.

- Piping: Another advantage of using Node.js streams is that they can be easily piped together, which allows data to be processed and passed from one stream to another seamlessly. This makes it easy to create complex data processing pipelines and allows developers to write more modular and reusable code.

- Transform streams: Transform streams allow data to be modified as it flows through them. This is useful for tasks such as data compression, encryption, or manipulation; for example, a developer could use a transform stream to convert data from one format to another, such as from JSON to XML. Here's an example of a transform stream in action:

```javascript
const { Transform } = require('stream');
const { createReadStream, createWriteStream } = require('fs');

// A Transform stream that upper-cases every chunk passing through it.
class UpperCaseTransform extends Transform {
  _transform(chunk, encoding, callback) {
    // chunk arrives as a Buffer; convert it to a string before changing case
    const upperCaseChunk = chunk.toString().toUpperCase();
    this.push(upperCaseChunk);
    callback();
  }
}

// Reading with 'utf8' encoding keeps multi-byte characters from being
// split across chunk boundaries.
const readStream = createReadStream('./input.txt', { encoding: 'utf8' });
const writeStream = createWriteStream('./output.txt');
const upperCaseTransform = new UpperCaseTransform();

readStream.pipe(upperCaseTransform).pipe(writeStream);
```

In this example, we define a custom UpperCaseTransform stream that converts all data to uppercase. We then use it to transform the contents of input.txt and write the result to output.txt.
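To make the memory-efficiency point concrete, here is a minimal sketch that counts newline characters in a file of any size. The helper name countNewlines and the sample file path are hypothetical. Memory use stays roughly bounded by the stream's buffer size (64 KiB by default for fs read streams), whereas fs.readFile() would load the entire file first.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Counts '\n' bytes one chunk at a time using async iteration over the
// read stream; no more than a few chunks are held in memory at once.
async function countNewlines(filePath) {
  let count = 0;
  for await (const chunk of fs.createReadStream(filePath)) {
    for (let i = chunk.indexOf(0x0a); i !== -1; i = chunk.indexOf(0x0a, i + 1)) {
      count += 1;
    }
  }
  return count;
}

// Usage: write a small sample file to the OS temp directory and count it.
const sample = path.join(os.tmpdir(), 'streams-demo-lines.txt');
fs.writeFileSync(sample, 'one\ntwo\nthree\n');
countNewlines(sample).then((n) => console.log(`${n} newline characters`));
```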

Node.js streams are a powerful feature that provides many advantages to developers. From memory efficiency and scalability to flexibility and performance, Node.js streams can help to improve the overall quality and performance of an application. Additionally, the ability to pipe streams together and use transform streams allows developers to create complex data processing pipelines and write more modular and reusable code. By following Node.js best practices and leveraging the power of streams, developers can build efficient, scalable, and high-performing applications.

Piping in Node Streams

In Node.js, streams can be piped together to enable data to flow seamlessly from one stream to another. Piping streams can help reduce the complexity of data processing and make code more readable and reusable.

To pipe a file into another stream, first create a readable stream with fs.createReadStream(). It takes a file path as an argument and returns a readable stream object. Here's an example:

```javascript
const { createReadStream } = require('fs');

const readStream = createReadStream('file.txt');
```

Once you have a readable stream, you can pipe it to another stream with its pipe() method. pipe() takes a writable stream as an argument and returns that same writable stream, which is what allows pipe() calls to be chained. Here's an example of piping a readable stream to a writable stream:

```javascript
const { createReadStream, createWriteStream } = require('fs');

const readStream = createReadStream('file.txt');
const writeStream = createWriteStream('output.txt');

// Copy file.txt into output.txt chunk by chunk
readStream.pipe(writeStream);
```

In this example, we create a readable stream with createReadStream() and a writable stream with createWriteStream(). We then pipe the readable stream into the writable stream with pipe().

By piping streams together, you can easily create complex data processing pipelines and reduce the amount of code needed to accomplish tasks. This can help to make your code more maintainable and scalable, especially when working with large data sets.
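One caveat with pipe() is error handling: it does not forward errors from one stream to the next, and it does not close the destination if the source fails. The stream.pipeline() function handles both. Below is a minimal sketch using the promise-based version from stream/promises (Node.js 15 and later); copyWithPipeline and the temporary file names are hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream/promises'); // Node.js 15+

// If any stream in the chain fails, pipeline() destroys all of them and
// rejects the returned promise with the error.
async function copyWithPipeline(source, destination) {
  await pipeline(fs.createReadStream(source), fs.createWriteStream(destination));
}

// Usage: work in a fresh temporary directory.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'pipeline-demo-'));
const src = path.join(dir, 'file.txt');
fs.writeFileSync(src, 'copied with pipeline\n');

copyWithPipeline(src, path.join(dir, 'output.txt'))
  .then(() => console.log('copy finished'))
  .catch((err) => console.error('copy failed:', err.message));

// A missing source rejects the promise, so the error is handled in one place.
copyWithPipeline(path.join(dir, 'missing.txt'), path.join(dir, 'never.txt'))
  .catch((err) => console.error('expected failure:', err.code));
```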


Node.js Stream Chaining

In Node.js, stream chaining is the process of connecting multiple streams together to create a data processing pipeline. This allows for more complex data processing scenarios than just piping two streams together.

Stream chaining involves creating a sequence of streams, where each stream performs a specific operation on the data before passing it to the next stream in the pipeline. For example, you might have a file read stream that reads data from a file, followed by a transform stream that modifies the data in some way, and then a write stream that saves the modified data to another file.

Here’s an example of stream chaining in Node.js using a file stream and a transform stream:

```javascript
const { createReadStream, createWriteStream } = require('fs');
const { Transform } = require('stream');

// 'utf8' encoding keeps multi-byte characters intact across chunks
const readStream = createReadStream('input.txt', { encoding: 'utf8' });
const writeStream = createWriteStream('output.txt');

// A Transform built from an options object instead of a subclass
const transformStream = new Transform({
  transform(chunk, encoding, callback) {
    const modifiedChunk = chunk.toString().toUpperCase();
    // callback(null, data) is shorthand for this.push(data); callback();
    callback(null, modifiedChunk);
  },
});

readStream
  .pipe(transformStream)
  .pipe(writeStream);
```

In this example, we create a read stream from one file and a write stream to another, plus a transform stream that converts each chunk of data to uppercase. We then chain the streams with pipe(), which connects the output of each stream to the input of the next.

Stream chaining is a powerful technique for data processing in Node.js and is common in backend development. By chaining multiple streams together, developers can build efficient, scalable data processing pipelines that handle large data sets without loading them fully into memory.
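Chains get more interesting when a stage has to keep state. The sketch below is a hypothetical three-stage pipeline (splitLines, numberLines, and numberFile are illustrative names): the first Transform splits text into lines, carrying an incomplete line over to the next chunk, and the second prefixes each line with its number before the result is written to a file. It uses pipeline() from stream/promises rather than pipe() so that an error in any stage rejects one promise.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { Transform } = require('stream');
const { pipeline } = require('stream/promises');

// Stage 1: split text into lines. A chunk boundary can fall in the middle
// of a line, so the incomplete tail is kept until the next chunk arrives.
function splitLines() {
  let tail = '';
  return new Transform({
    readableObjectMode: true, // emit one string per line
    transform(chunk, encoding, callback) {
      const parts = (tail + chunk.toString()).split('\n');
      tail = parts.pop();
      for (const line of parts) this.push(line);
      callback();
    },
    flush(callback) {
      if (tail) this.push(tail); // last line had no trailing newline
      callback();
    },
  });
}

// Stage 2: prefix each line with its 1-based line number.
function numberLines() {
  let n = 0;
  return new Transform({
    writableObjectMode: true, // accept the line strings from stage 1
    transform(line, encoding, callback) {
      n += 1;
      callback(null, `${n}: ${line}\n`);
    },
  });
}

// Chain: file -> splitLines -> numberLines -> file
async function numberFile(input, output) {
  await pipeline(
    fs.createReadStream(input, { encoding: 'utf8' }),
    splitLines(),
    numberLines(),
    fs.createWriteStream(output)
  );
}

// Usage: number the lines of a sample file in a temporary directory.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'chain-demo-'));
const input = path.join(dir, 'input.txt');
fs.writeFileSync(input, 'first line\nsecond line\nthird line\n');
numberFile(input, path.join(dir, 'output.txt')).then(() => console.log('done'));
```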

Conclusion

Node.js streams provide a powerful and flexible way to handle data processing in Node.js applications. With the various stream types, developers can read, write, and transform data, and stream chaining allows for complex data processing pipelines. Stream processing can substantially reduce memory usage and latency when handling large volumes of data. By using Node.js streams and following best practices, developers can create efficient, scalable, and maintainable applications.

Frequently Asked Questions

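What is the primary difference between the original Node.js streams and Streams2?

"Streams2" is the common name for the revised stream API introduced in Node.js v0.10. Compared with the original streams, it added the pull-based read() method and 'readable' event, core base classes such as Readable, Writable, Duplex, and Transform, and object mode.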

What is a stream in Node.js?

A stream in Node.js is a continuous flow of data between a source and a destination, processed incrementally in small chunks rather than as a whole.

How do you use streams in Node.js?

In Node.js, you can use streams by creating a readable, writable, or transform stream, piping streams together, and chaining streams to create a data processing pipeline.